A London traveler booked an Airbnb in Manhattan and nearly walked away with a $16,000 bill for damage she never caused. The photos looked damning: cracked countertops, stained furniture, smashed appliances. But closer inspection revealed inconsistencies: warped edges, repeated patterns, and other signs the images had been altered with AI. The guest fought back, proved the photos were fake, and Airbnb eventually refunded her.

That case grabbed headlines, but it isn’t an isolated incident. The short-term rental industry is facing a surge in scams supercharged by generative AI. What once required technical skill can now be done instantly with off-the-shelf tools, making fake listings, doctored photos, and AI-written reviews harder than ever to spot.

For professional managers, this isn’t only about guests being tricked. It’s about how scams erode trust, push platforms to tighten policies, and raise the bar for proving legitimacy.

AI Airbnb Scams: How AI Powers the Scams

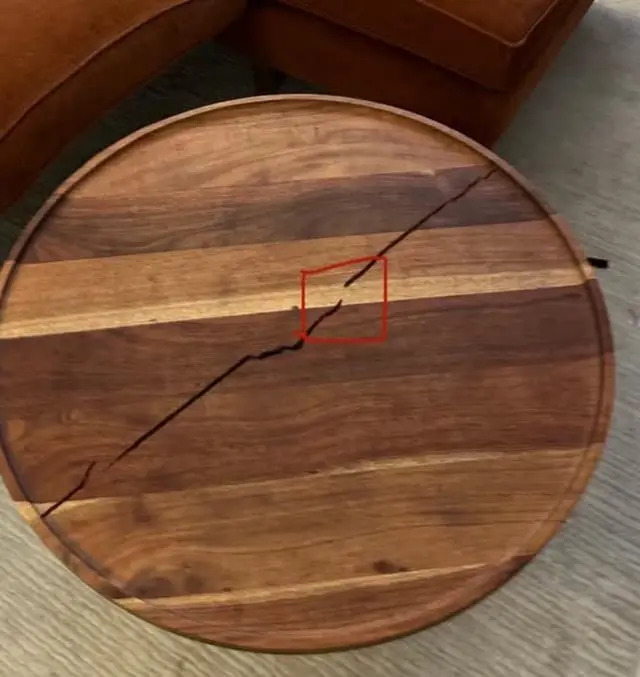

1. Doctored Damage Claims

AI image tools make it possible to fabricate “evidence.” In the Manhattan case, altered images almost forced a guest to pay $16,000 in damages. This kind of manipulation shows how disputes can spiral when images can no longer be trusted.

Why it matters for managers: If guests adopt the same tactics, operators could face fraudulent claims, longer resolution timelines, and increased exposure to penalties.

2. Fake Listings and Reviews

Scammers use AI to generate realistic property photos, build host personas, and churn out hundreds of “authentic”-sounding reviews. A few keystrokes can create a listing that looks more professional than the real thing.

Airbnb’s own research with Get Safe Online underlines how convincing this content has become: nearly two thirds of Brits struggle to tell AI-generated property images apart from real ones. More than a third mistook fakes for genuine photos, while another quarter weren’t sure.

Why it matters for managers: Copycat scams can damage trust in entire markets. A fraudulent twin of your property could circulate with fake reviews, making it harder for legitimate operators to win bookings.

3. Social Media Spin-Offs

On Instagram and TikTok, scammers post glossy, AI-crafted rentals with influencer-style captions. The aim is simple: lure travelers into paying off-platform, where protections don’t apply.

And younger travelers are the most exposed. Airbnb’s UK research shows:

- 39% of Gen Z say they’d pay by bank transfer if it saved them money.

- 43% of Gen Z would book directly via social media.

- More than a third would trust a celebrity or influencer endorsement enough to make a large booking.

Why it matters for managers: Even authentic marketing efforts on social channels now face higher skepticism. Guests burned once are less likely to trust even genuine ads.

When Automation Looks Like a Scam

A Reddit thread recently flagged an Airbnb listing as a possible AI scam because it looked too perfect. Users pointed to:

- AI-style host photos

- Copy-paste guest replies that read like chatbot scripts

- Suspicious company names not tied to real locations

- Doctored photos with added logos and “enhancements”

- Review anomalies like dozens of five-star ratings on a brand-new listing

Here’s the irony: some of these traits are also signs of professionalization. Many managers automate guest messaging, maintain consistent review scores, and outsource photography. But in 2025, guests increasingly conflate efficiency with fraud.

Takeaway for managers:

- Add personal touches to automated messages.

- Be transparent about your company identity.

- Audit your listings for a “robotic” feel: too much polish can raise red flags.
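For managers who want to make that audit repeatable, the red flags above can be turned into a rough self-check. The sketch below is only an illustration: the `Listing` record, its field names, and every threshold are assumptions made up for this example, not an Airbnb schema or anything a platform is known to use.

```python
from collections import Counter
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Listing:
    """Hypothetical listing record; field names are illustrative only."""
    created: date
    company_address: str
    review_ratings: list[int] = field(default_factory=list)
    sent_messages: list[str] = field(default_factory=list)


def audit_listing(listing, today, new_listing_days=60,
                  five_star_limit=24, max_repeat_share=0.5):
    """Return human-readable red flags. All thresholds are assumed defaults."""
    flags = []

    # Red flag: dozens of five-star ratings on a brand-new listing.
    age_days = (today - listing.created).days
    five_stars = sum(1 for rating in listing.review_ratings if rating == 5)
    if age_days <= new_listing_days and five_stars >= five_star_limit:
        flags.append("many five-star reviews on a new listing")

    # Red flag: copy-paste replies that read like a chatbot script.
    # Messages are lowercased and whitespace-normalized before comparing.
    normalized = [" ".join(m.lower().split()) for m in listing.sent_messages]
    if len(normalized) >= 4:
        _, top_count = Counter(normalized).most_common(1)[0]
        if top_count / len(normalized) > max_repeat_share:
            flags.append("guest replies are mostly identical")

    # Red flag: company identity not tied to a real, stated location.
    if not listing.company_address.strip():
        flags.append("no company address disclosed")

    return flags
```

A check like this only surfaces what a skeptical guest might notice. Whether a flagged item needs fixing is still a human judgment call, and the thresholds should be tuned to your own portfolio.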

How Airbnb Fights Back

Airbnb highlights its AI-driven fraud detection tools:

- Behavior analysis to catch mismatched booking patterns.

- Risk scoring for new accounts making high-value reservations.

- Automated scanning for duplicate or suspiciously perfect listings.

- Awareness campaigns encouraging safe booking and reporting scams.

In 2024 alone, Airbnb says it detected and took down more than 3,200 third-party phishing domains. Still, the race is ongoing. As scammers adopt AI, Airbnb tightens its rules, and managers feel the ripple effect. Vrbo’s recent move to impose 100% penalties for failed check-ins is one example of online travel agencies (OTAs) justifying harsher enforcement in the name of fraud prevention.

Beyond Airbnb: Booking.com Sees Up to a 900% Surge in Scams

The problem spans the entire travel sector. Booking.com’s internet safety chief, Marnie Wilking, warned in 2024 that travel scams had risen 500–900% in just 18 months, largely due to generative AI. Phishing attempts, fake booking links, and fraudulent listings have become far harder to detect because AI can generate polished, multilingual emails and realistic images at scale.

“The attackers are definitely using AI to launch attacks that mimic emails far better than anything that they’ve done to date,” Wilking said.

For platforms, this means training AI models to catch and remove fake listings before they reach guests. For managers, it means arriving guests may already be skeptical, conditioned by headlines about scams and wary of too-good-to-be-true deals.

The Bigger Picture for Managers

For professional property managers, AI scams aren’t just a guest problem. They ripple across the ecosystem:

- Tighter OTA policies: justified as anti-scam measures, but applied broadly.

- Greater scrutiny: more ID checks, more verification hurdles.

- Trust erosion: guests second-guessing even legitimate listings.

And the financial impact is growing. Airbnb’s UK research found that travelers who’ve been scammed lose an average of £1,937, a figure climbing every year.

In other words, even if you play by the book, you’ll feel the impact.

Bottom Line

AI is transforming the scam landscape in short-term rentals. For travelers, the risks are fake listings, doctored photos, and off-platform lures. For managers, the risks are tougher OTA rules, higher verification barriers, and a trust gap that automation alone can’t fix.

The safest path in 2025 is vigilance plus transparency: use AI tools for efficiency, but layer in human presence to maintain trust. Guests don’t just want flawless listings; they want reassurance that there’s a real person behind them.

Uvika Wahi is the Editor at RSU by PriceLabs, where she leads news coverage and analysis for professional short-term rental managers. She writes on Airbnb, Booking.com, Vrbo, regulations, and industry trends, helping managers make informed business decisions. Uvika also presents at global industry events such as SCALE, VITUR, and Direct Booking Success Summit.